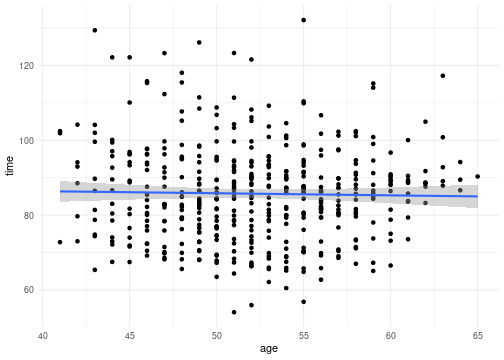

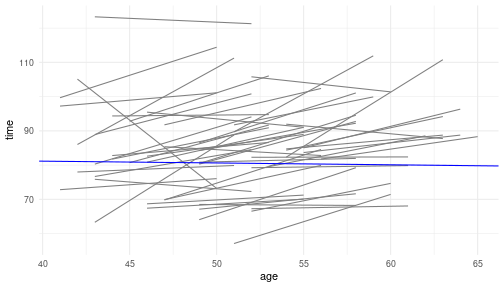

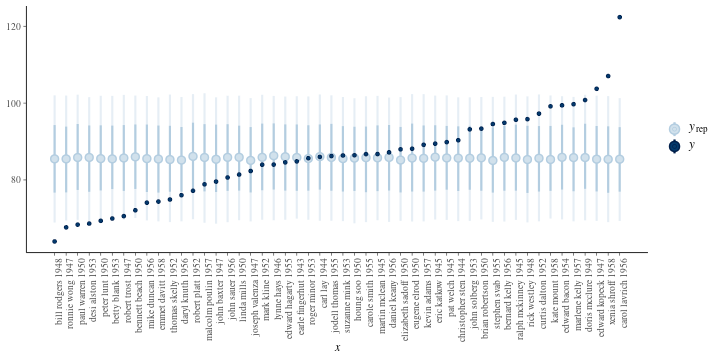

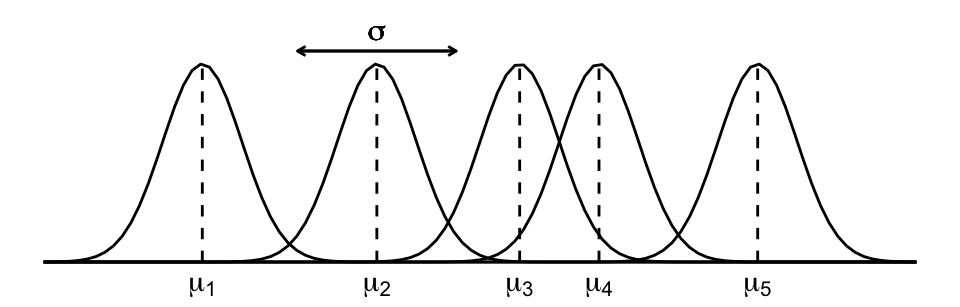

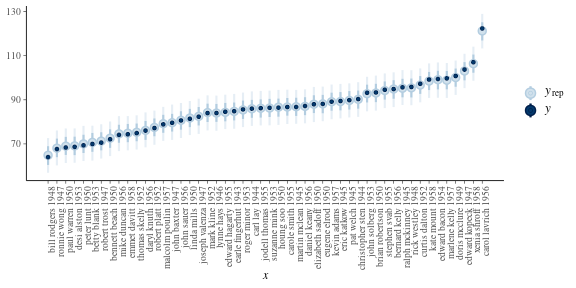

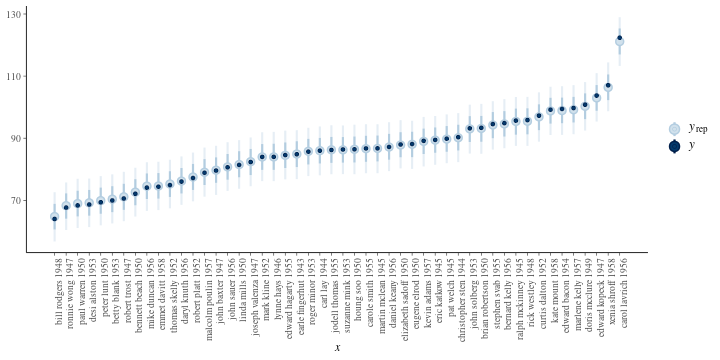

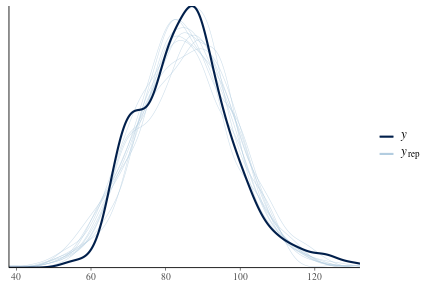

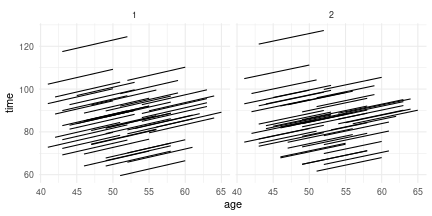

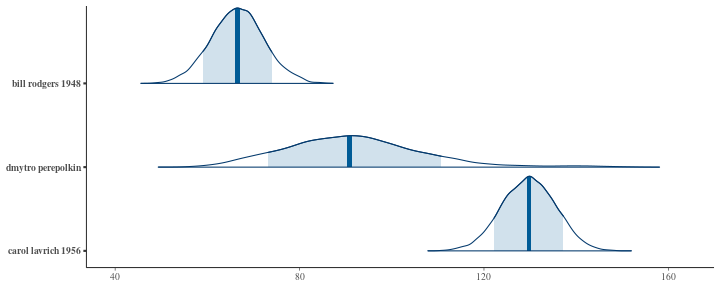

<style type="text/css"> .title-slide .remark-slide-number { display: none; } </style> <div> <style type="text/css">.xaringan-extra-logo { width: 110px; height: 128px; z-index: 0; background-image: url(fig/bl_logo.png); background-size: contain; background-repeat: no-repeat; position: absolute; top:2em;right:2em; } </style> <script>(function () { let tries = 0 function addLogo () { if (typeof slideshow === 'undefined') { tries += 1 if (tries < 10) { setTimeout(addLogo, 100) } } else { document.querySelectorAll('.remark-slide-content:not(.title-slide):not(.inverse):not(.hide_logo)') .forEach(function (slide) { const logo = document.createElement('a') logo.classList = 'xaringan-extra-logo' logo.href = 'https://www.lucs.lu.se/bayes/' slide.appendChild(logo) }) } } document.addEventListener('DOMContentLoaded', addLogo) })()</script> </div> .h1.f-headline.fw1[ Bayesian Analysis ] .h2.f-subheadline.lh-title[ and Decision Theory ] <br><br> .f1[ Mixed (hierarchical) models ] .f3[ NAMV005<br> Lund 2022 ] --- # Course content |Topic|Format| Date | |-----|------|------| | Introduction to Bayesian inference and subjective probability <br> Conjugate models| Review <br> Lecture | 17 Jan 2022 | | Conjugate models (review and exercises) <br> Modern Bayesian analysis with MCMC | Review <br> Lecture | 24 Jan 2022 | | Modern Bayesian analysis with MCMC (review and exercises) <br> Hierarchical models | Review <br> Lecture | 31 Jan 2022 | | Hierarchical models (review and exercises) <br> Decision theory <br> Literature seminar | Review <br> Lecture <br> Seminar | 07 Feb 2022 | --- # Recap 1. Conjugate models. Globe tossing with beta-binomial model 1. Linear models. Gaussian model assumptions - Introduction to `brms` and the first model - Peeking under the hood. Our own first program in Stan. - Data wrangling and visualization of posterior draws. -- ## Today 1. Mixed models. Repeated measurements / hierarchical data. 1. Pooling. 1. Normal hierarchical model and beyond. 1. Adding more layers --- # Mixed models Up until now we worked under the iid data assumption. Sometimes samples in the data may not be independent because: + several measurements were collected from the same individual (repeated measurement data), or + surveyed individuals share some characteristics (e.g. group membership): pupils in class, athletes in clubs, citizens in countries, etc. ```r cherry50 <- read_csv("data/mdsr_cherry50.csv") cherry50 ``` ``` ## # A tibble: 461 × 8 ## name_yob age sex year previous nruns gun_time time ## <chr> <dbl> <chr> <dbl> <dbl> <dbl> <lgl> <dbl> ## 1 bennett beach 1950 49 M 1999 0 9 TRUE 77.4 ## 2 bennett beach 1950 50 M 2000 1 9 FALSE 63.5 ## 3 bennett beach 1950 52 M 2002 2 9 FALSE 67.3 ## 4 bennett beach 1950 53 M 2003 3 9 FALSE 62.1 ## 5 bennett beach 1950 54 M 2004 4 9 FALSE 65.2 ## 6 bennett beach 1950 55 M 2005 5 9 FALSE 70.1 ## 7 bennett beach 1950 56 M 2006 6 9 FALSE 72.9 ## 8 bennett beach 1950 57 M 2007 7 9 TRUE 76.7 ## 9 bennett beach 1950 58 M 2008 8 9 TRUE 93.7 ## 10 bernard kelly 1956 43 M 1999 0 10 TRUE 89.7 ## # … with 451 more rows ``` --- # Complete pooling .pull-left[ ```r ggplot(cherry50, aes(x=age, y=time))+ geom_point()+ geom_smooth(method = "lm")+ theme_minimal() ``` <!-- --> ] .pull-right[ Let's fit the simple Bayesian linear model `$$\begin{aligned}Y_i&\sim Normal(\mu_i, \sigma)\\ \mu_i&=\beta_0 + \beta_1X_i \end{aligned}$$` ```r pooled_mod <- brm(time~1+age, data=cherry50, family=gaussian(), refresh=0, silent=1, prior=c( prior(normal(0, 2.5), class=Intercept), prior(normal(0,2.5), class=b), prior(exponential(1), class=sigma))) pooled_mod ``` ] --- # To pool or not to pool? .pull-left[ ```r cherry50 %>% ggplot(aes(x=age, y=time, group=name_yob))+ geom_smooth(method = "lm", se=FALSE, color="grey50", size=0.5)+ geom_abline(data=summarise_draws(pooled_mod), aes(intercept = mean[1], slope=mean[2]), color="blue")+ theme_minimal() ``` <!-- --> ] .pull-right[ Hierarchy in the data: + all runners (not only in our data) + sample of 50 runners in the `cherry50` dataset Two sources of variability: + within-group + between-group ] --- # Pooled model (no predictors) `$$\begin{gathered}Y_{ij}\sim Normal(\mu, \sigma) \\ \mu \sim Normal(0,25)\\ \sigma \sim Exponential(1) \end{gathered}$$` ```r cherry50_pooled_mod <- brms::brm(time~1, data=cherry50, family = gaussian(), refresh=0, prior=c( prior(normal(0,25), class=Intercept), #assuming centering prior(exponential(1), class=sigma))) cherry50_pooled_mod ``` --- # Pooled model (no predictors) `$$\begin{gathered}Y_{ij}\sim Normal(\mu, \sigma) \\ \mu \sim Normal(0,25)\\ \sigma \sim Exponential(1) \end{gathered}$$` ```r cherry50_means <- cherry50 %>% group_by(name_yob) %>% summarise(age=mean(age), time=mean(time)) %>% arrange(time) cherry50_means_predictions <- posterior_predict(cherry50_pooled_mod, newdata=cherry50_means) ppc_intervals(cherry50_means$time, yrep = cherry50_means_predictions, prob_outer = 0.8)+ scale_x_continuous(labels=cherry50_means$name_yob, breaks = seq_len(nrow(cherry50_means)))+ xaxis_text(angle=90, hjust=1) ``` <!-- --> --- # No pooling model .pull-left[ `$$\begin{gathered}Y_{ij}\sim Normal(\mu_j, \sigma)\\ \mu_j \sim Normal(85, s_j)\\ \sigma \sim Exp(1) \end{gathered}$$` Unpooled model: + ignores information about about the running times of one athlete in predicting the running time of another + can not generalize beyond the runners in the sample.  ] .pull-right[ ```r cherry50_unpooled_mod <- brms::brm( time ~ 0+name_yob, data = cherry50, family = gaussian(), refresh=0, prior=c( prior(normal(85, 10), class=b), prior(exponential(1), class=sigma))) cherry50_unpooled_mod ``` <!-- --> ] --- # Hierarchical model `$$\begin{gathered} Y_{ij} \sim Normal(\mu_j, \sigma_y)\\ \mu_j \sim Normal(\mu, \sigma_\mu)\\ \mu \sim Normal(85,10)\\ \sigma_y \sim Exponential(0.5)\\ \sigma_\mu \sim Exponential(1) \end{gathered}$$` Layer 1: `\(Y_{ij}|\mu_j,\sigma_y\)` - models how running times vary *within* the runner `\(j\)` results - `\(\mu_j\)` is the mean running time for athlete `\(j\)` and - `\(\sigma_y\)` is the within-group variability, i.e. the standard deviation of running times from year to year within a single athlete. It is assumed to be the same for every athlete. Layer 2: `\(\mu_j|\mu, \sigma_\mu\)` - models how the typical running time `\(\mu_j\)` varies *between* the athletes - `\(\mu\)` is global average of the running times `\(\mu_j\)` across all athletes `\(j\)` and - `\(\sigma_\mu\)` is between-group variability, i.e SD of the mean running times `\(\mu_j\)` varyingfrom athlete to athlete Layer 3: `\(\mu, \sigma_y, \sigma_\mu\)` - prior model for shared (global) parameters - global parameters `\(\mu, \sigma_y\)` and `\(\sigma_\mu\)` shared by all Cherry Blossom Race athletes --- # Hierarchical model `$$\begin{gathered} Y_{ij} \sim Normal(\mu_j, \sigma_y)\\ \mu_j \sim Normal(\mu, \sigma_\mu)\\ \mu \sim Normal(85,10)\\ \sigma_y \sim Exponential(0.5)\\ \sigma_\mu \sim Exponential(1) \end{gathered}$$` This model has a name: (one-way) "ANOVA" (ah! no! wow!), because the variance (within-group and between-group) is split. Another way to think about the athlete-specific mean parameter `\(\mu_j\)` is in terms of "individual adjustments" `\(b_j\)` to the global mean `\(\mu\)`. `$$\begin{gathered} Y_{ij} \sim Normal(\mu_j, \sigma_y)\\ \mu_j = \mu + b_j\\ b_j \sim Normal(0, \sigma_\mu)\\ \dots \end{gathered}$$` The two forms of this model are equivalent. --- # Hierarchical model ```r #get_prior(time ~ (1|name_yob), family = gaussian(), data=cherry50) cherry50_hi_mod <- brms::brm( time ~ (1|name_yob), data=cherry50, family = gaussian(), refresh=0, prior=c(prior(normal(85,10), class=Intercept), prior(exponential(1), class=sd), prior(exponential(0.5), class=sigma)), iter = 5000, seed=42) cherry50_hi_mod ``` <!-- --> --- # Adding predictors .pull-left[ Complete pooling model for running time dependent on age. `$$\begin{gathered} Y_{ij} \sim Normal(\mu_i, \sigma)\\ \mu_i=\beta_0+\beta_1X_{ij}\\ \beta_0 \sim Normal(0,35)\\ \beta_1\sim Normal(0,15)\\ \sigma\sim Exponential(1) \end{gathered}$$` ```r #get_prior(time~age, data=cherry50) cherry50_age_pooled_mod <- brms::brm( time~age, data=cherry50, family = gaussian(), refresh=0, prior=c(prior(normal(0,35), class=Intercept), prior(normal(0,15), class=b), prior(exponential(1), class=sigma))) cherry50_age_pooled_mod ``` ] .pull-right[ ```r pp_check(cherry50_age_pooled_mod) ``` <!-- --> ```r tidy(cherry50_age_pooled_mod, effects = "fixed", conf.int = TRUE, conf.level = 0.80) # also try effects = "ran_pars" or "ran_vals" ``` ] --- # Varying intercepts .pull-left[ `$$\begin{gathered} Y_{ij}\sim Normal(\mu_{ij}, \sigma_y)\\ \mu_{ij}=\beta_{0j}+\beta_1X_{ij}\\ \beta_{0j} \sim Normal(\beta_0, \sigma_0)\\ \beta_0 \sim Normal(100,10)\\ \beta_1 \sim Normal(2.5, 1)\\ \sigma_y \sim Exponential(0.5)\\ \sigma_0 \sim Exponential(1) \end{gathered}$$` ```r cherry50_age_vi_mod <- brms::brm( time ~ age + (1|name_yob), data=cherry50, family = gaussian(), refresh=0, prior=c( prior(normal(100,10), class=Intercept), prior(normal(2.5,1), class=b), prior(exponential(0.5), class=sigma), prior(exponential(1), class=sd) ), iter = 5000, seed=42) cherry50_age_vi_mod ``` ] .pull-right[ **Layer 1**: Variability within the runner. Note that parameters `\(\beta_1\)` and `\(\sigma_y\)` are shared. **Layer 2**: Variability between the runners. Note that both `\(\beta_0\)` and `\(\sigma_0\)` are shared. ```r add_epred_draws(cherry50, cherry50_age_vi_mod, ndraws = 2) %>% ggplot(aes(x = age, y = time)) + geom_line(aes(y = .epred, group = paste(name_yob, .draw))) + facet_wrap(vars(.draw)) + theme_minimal() ``` <!-- --> ] --- # Variable slope and intercept .pull-left[ `$$\begin{gathered} Y_{ij}\sim Normal(\mu_{ij}, \sigma_y)\\ \mu_{ij}=\beta_{0j}+\beta_{1j}X_{ij}\\ \dbinom{\beta_{0j}}{\beta_{1j}}\sim Normal\left(\dbinom{\beta_0}{\beta_1}, \Sigma\right)\\ \beta_0 \sim Normal(100,10)\\ \beta_1 \sim Normal(2.5, 1)\\ \sigma_y \sim Exponential(0.5)\\ \Sigma \sim LKJ(1) \end{gathered}$$` ```r #get_prior(time ~ age + (age|name_yob), data=cherry50) cherry50_age_vsi_mod <- brms::brm( time ~ age + (age|name_yob), data=cherry50, family = gaussian(), refresh=0, prior=c( prior(normal(100,10), class=Intercept), prior(normal(2.5,1), class=b), prior(exponential(0.5), class=sigma), prior(exponential(1), class=sd), set_prior("lkj(1)", class="cor") ), iter = 5000, seed=42) cherry50_age_vsi_mod ``` ] .pull-right[ - Runner-specific *intercept* `\(\beta_{0j}\)` and *slope* `\(\beta_{1j}\)` work together describing the model for the runner `\(j\)`, hence they are correlated. We represent them with joint Normal model `\(\dbinom{\beta_{0j}}{\beta_{1j}}\)`, with some global mean `\(\dbinom{\beta_{0}}{\beta_{1}}\)` and global covariabce `$$\Sigma=\left(\begin{matrix} \sigma_0 & \rho\sigma_0\sigma_1\\ \rho\sigma_0\sigma_1 & \sigma_1 \end{matrix}\right)$$` Distribution of covariance matrices can be modeled with LKJ prior (decomposition of covariance). See Section 17.3.2 in the book for details (Johnson et al, 2022). ] --- #Posterior predictions ```r # Simulate posterior predictive models for the 3 runners set.seed(42) athletes <- c("bill rodgers 1948", "dmytro perepolkin", "carol lavrich 1956") predict_2009_race <- posterior_predict(cherry50_age_vsi_mod, newdata = data.frame(name_yob = athletes, age = c(61, 60, 53)), allow_new_levels = TRUE ) colnames(predict_2009_race) <- athletes predict_2009_race %>% as_draws_matrix() %>% mcmc_areas(prob = 0.8) ``` <!-- --> --- # Summary Pick your model - Pooled model: `time~1` - Unpooled model: `time~0+name_yob` - Partially pooled (hierarchical model): `time ~ (1|name_yob)` With predictors - Regression (no hierarchical structure): `time~1+age` - Pooled model: `time~age` - Varying intercepts: `time ~ age + (1|name_yob)` - Varying slopes and intercepts: `time ~ age + (age|name_yob)` --- # References Acree MC. 2021. The Myth of Statistical Inference [Internet]. Cham: Springer International Publishing; [accessed 2022 Jan 6]. https://doi.org/10.1007/978-3-030-73257-8 Bürkner P-C. 2017. Advanced Bayesian Multilevel Modeling with the R Package brms. arXiv:170511123 [stat] [Internet]. [accessed 2022 Jan 23]. http://arxiv.org/abs/1705.11123 Clayton A. 2021. Bernoulli’s fallacy: statistical illogic and the crisis of modern science. New York: Columbia University Press. Johnson AA, Ott MQ, Dogucu M. 2022. Bayes rules! an introduction to Bayesian modeling with R. Boca Raton: CRC Press. McElreath R. 2020. Statistical Rethinking: A Bayesian Course with Examples in R and Stan [Internet]. 2nd ed. New York, NY: Chapman and Hall/CRC; [accessed 2020 May 3]. https://doi.org/10.1201/9780429029608 Raiffa H, Schlaifer R. 2000. Applied statistical decision theory. Wiley classics library ed. New York: Wiley. Sahlin N-E. 1990. The philosophy of FP Ramsey. [place unknown]: Cambridge University Press. --- class: center, middle # Thanks! Slides created via the R package [**xaringan**](https://github.com/yihui/xaringan). The chakra comes from [remark.js](https://remarkjs.com), [**knitr**](https://yihui.org/knitr/), and [R Markdown](https://rmarkdown.rstudio.com).